Rollback Friendly Audio

For an overview of rollback netcode, watch Core-A Gaming's video on it.

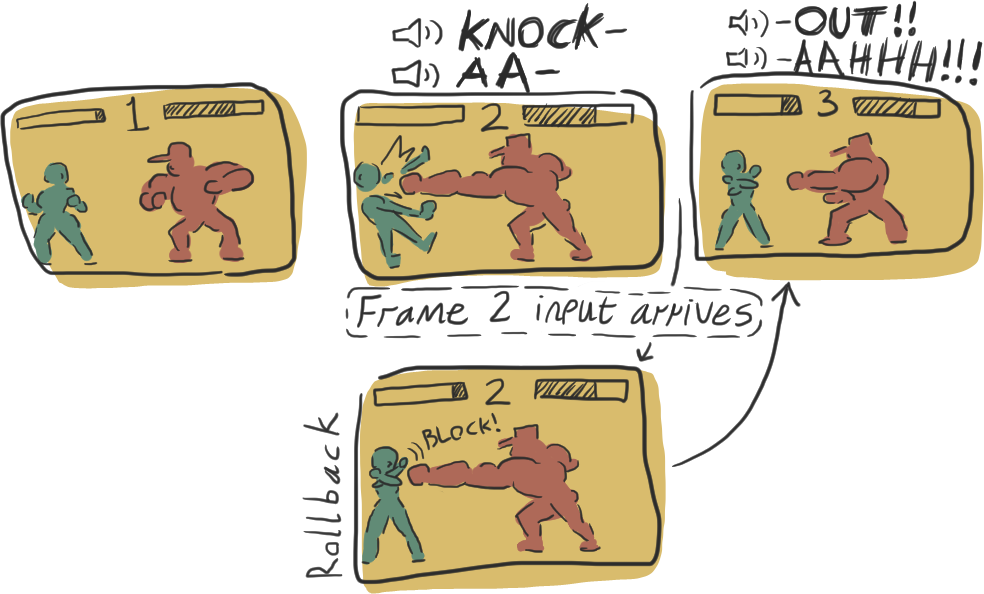

Rollback netcode requires that game state can be duplicated. Each frame needs to be saved into a history buffer so the game can go back a few frames and resimulate when an input arrives late and is different than expected. For example, if the opponent's input for frame 2 arrives on frame 6, we go back in time and resimulate frames 2, 3, 4, and 5 with the new information, then simulate and render frame 6. This is easy if the game state is made up of statically sized arrays and no pointers that reference memory inside the game state:

    struct EntityHandle {
        int id;
        int index;
    };

    struct Entity {
        Vector3 position;
        int state;
        EntityHandle target;
    };

    struct GameState {
        Entity entities[64];
        int randomSeries;
    };

This makes it trivial to copy the game state for every frame. It's also easy with a pure functional style of game update that always returns a new game state instead of changing the old one.

frames[n+1] = updateGameState(frames[n]);
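As a rough illustration of the history buffer and resimulation described above, here is a minimal sketch. The `History` struct, `kHistorySize`, `rollbackAndResimulate`, and the toy `GameState` with two player positions are all hypothetical names made up for this example, not code from my engine.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical toy game state: fixed size, no pointers, trivially copyable.
struct GameState {
    int32_t playerX[2];
};

static const int kHistorySize = 8; // assumed maximum rollback window, in frames

struct History {
    GameState frames[kHistorySize];    // frames[f % kHistorySize] is the state at the start of frame f
    int32_t   inputs[kHistorySize][2]; // inputs used (or predicted) when simulating frame f
};

// Pure update: returns the next state instead of modifying the old one.
GameState updateGameState(GameState state, const int32_t inputs[2]) {
    state.playerX[0] += inputs[0];
    state.playerX[1] += inputs[1];
    return state;
}

// Store the late remote input for lateFrame, then resimulate every frame from
// lateFrame up to (but not including) currentFrame.
// Returns the corrected state at the start of currentFrame.
GameState rollbackAndResimulate(History* history, int lateFrame, int currentFrame, int32_t remoteInput) {
    assert(lateFrame >= 0 && lateFrame <= currentFrame);
    assert(currentFrame - lateFrame < kHistorySize);

    history->inputs[lateFrame % kHistorySize][1] = remoteInput;

    GameState state = history->frames[lateFrame % kHistorySize];
    for (int f = lateFrame; f < currentFrame; ++f) {
        history->frames[f % kHistorySize] = state; // overwrite the mispredicted history
        state = updateGameState(state, history->inputs[f % kHistorySize]);
    }
    history->frames[currentFrame % kHistorySize] = state;
    return state;
}
```

With the example from earlier, calling `rollbackAndResimulate(&history, 2, 6, input)` resimulates frames 2, 3, 4, and 5 and returns the state from which frame 6 is simulated and rendered.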

Things get complicated quickly when pointer-filled game state is updated through in-place modifications. Michael Stallone gave a great GDC talk on the difficulty of integrating rollback netcode into NetherRealm Studios' object-oriented game engine.

Only state that changes depending on inputs needs to be stored for each frame. The game will always catch back up to real-time after a rollback, so things like a waterfall's animation in the background can seamlessly ignore rollback. There probably won't be big enough differences before and after rollback to warrant re-rendering every in-between frame for temporal graphics effects like TAA.

Audio is a different challenge: it depends on player input and runs asynchronously from gameplay. Starting and stopping sounds requires separate timers from the usual game time step. Games usually handle this just fine, but rollback adds an unusual requirement to an audio system: changing what sounds started playing in the past. Not being able to cancel a sound that a rollback determined should not have happened would be very distracting.

In early versions of The King of Fighters XV, sounds would continue to play after a rollback. This was especially noticeable if a K.O. was rolled back.

I felt like most of my game engine components would work well for a game with rollback, but realized I had no idea how to do the audio. I wanted to replace DirectSound and its weird bugs anyway, so I began thinking about how to make my ideal audio system.

There were four axes along which I wanted to optimize audio for rollback:

- Latency: speakers should begin playing audio right after a sound is triggered in game.

- Performance: avoid doing unnecessary work on the CPU.

- Rollbackability: it should be possible to go back in time to a previous frame's audio state and play sounds at the correct time while fast-forwarding.

- Simplicity: the code should be clear and easy to understand. Reduce the chance of memory bugs and have no possibility of race conditions.

Playing Audio

To output audio, we ask the operating system for a buffer and fill it with audio samples. There are various levels of abstraction to do this, but the lowest level thing on Windows is WASAPI.

To get a buffer, we need a handle to an audio device, such as headphones or a laptop's built-in speakers. To get a device, we need an enumerator. This can list all of the audio devices connected to the system, but WASAPI's enumerator has a convenient method to get the user's primary device, or "default endpoint."

With a device we can create an audio-client with a specific format, and a render-client that actually gives us the buffer for writing samples. In my game I use floating point for samples in the [-1, 1] range, since it offers plenty of headroom when combining sounds and makes the math code simple.

The client's period hints at how long we want the buffer to be. This directly impacts latency.

With everything set up, we can write audio samples in a loop. First we get the "padding," which indicates how much of the buffer is unplayed. We then get a pointer and write to the played region of the buffer. The following example program shows all these steps and outputs a sine wave.

minimal_wasapi.cpp

    // Compile: cl minimal_wasapi.cpp /link ole32.lib
    #include <windows.h>
    #include <inttypes.h>
    #include <math.h>
    #include <mmdeviceapi.h>
    #include <audioclient.h>

    #define BYTES_PER_CHANNEL sizeof(float)
    #define CHANNELS_PER_SAMPLE 1
    #define SAMPLES_PER_SECOND 44100

    int main()
    {
        CoInitializeEx(nullptr, COINIT_DISABLE_OLE1DDE);

        // Enumerator -> default endpoint -> audio client
        IMMDeviceEnumerator* enumerator;
        CoCreateInstance(__uuidof(MMDeviceEnumerator), NULL, CLSCTX_ALL, __uuidof(IMMDeviceEnumerator), (void**)&enumerator);

        IMMDevice* endpoint;
        enumerator->GetDefaultAudioEndpoint(eRender, eMultimedia, &endpoint);

        IAudioClient* audioClient;
        endpoint->Activate(__uuidof(IAudioClient), CLSCTX_ALL, NULL, (void**)&audioClient);

        REFERENCE_TIME defaultDevicePeriod, minDevicePeriod;
        audioClient->GetDevicePeriod(&defaultDevicePeriod, &minDevicePeriod);

        // Mono, 32-bit float samples
        WAVEFORMATEX format = {0};
        format.wFormatTag = WAVE_FORMAT_IEEE_FLOAT;
        format.nChannels = CHANNELS_PER_SAMPLE;
        format.nSamplesPerSec = SAMPLES_PER_SECOND;
        format.wBitsPerSample = BYTES_PER_CHANNEL*8;
        format.nBlockAlign = (CHANNELS_PER_SAMPLE*BYTES_PER_CHANNEL);
        format.nAvgBytesPerSec = SAMPLES_PER_SECOND*format.nBlockAlign;
        audioClient->Initialize(AUDCLNT_SHAREMODE_SHARED, AUDCLNT_STREAMFLAGS_AUTOCONVERTPCM, defaultDevicePeriod, 0, &format, 0);

        IAudioRenderClient* renderClient;
        audioClient->GetService(__uuidof(IAudioRenderClient), (void**)&renderClient);

        uint32_t bufferSampleCount;
        audioClient->GetBufferSize(&bufferSampleCount);

        audioClient->Start();

        uint64_t sampleTime = 0;
        while (1) {
            // Padding is the part of the buffer that has not been played yet
            uint32_t paddingSampleCount;
            audioClient->GetCurrentPadding(&paddingSampleCount);
            uint32_t sampleCount = bufferSampleCount - paddingSampleCount;

            float* buffer;
            if (SUCCEEDED(renderClient->GetBuffer(sampleCount, (BYTE**)&buffer))) {
                // Sine wave: sin(2*pi*frequency*time)
                float toneHertz = 500;
                for (uint32_t i=0; i<sampleCount; ++i) {
                    buffer[i] = sinf(sampleTime*2.0f*3.14159f*toneHertz/SAMPLES_PER_SECOND);
                    sampleTime += 1;
                }
                renderClient->ReleaseBuffer(sampleCount, 0);
            }
        }
    }

We need to write to the buffer frequently enough that it won't run out of audio to play. A buffer of 1000 samples played at 44100 samples per second lasts about 22.7ms, so at 60fps (16.7ms per frame) we would need to write every frame. If a frame ever takes longer than the buffer lasts, the player could hear drops in the audio.
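The arithmetic behind that example is small enough to write down. This is only a sketch with helper names I made up for illustration (`bufferDurationMs`, `minimumFramesPerSecond`), and it assumes the game writes to the buffer exactly once per frame.

```cpp
#include <cassert>
#include <cstdint>

// How long a buffer of sampleCount samples lasts, in milliseconds.
double bufferDurationMs(uint32_t sampleCount, uint32_t samplesPerSecond) {
    assert(samplesPerSecond > 0);
    return 1000.0 * sampleCount / samplesPerSecond;
}

// Slowest frame rate that still refills the buffer before it runs dry,
// assuming one write per frame.
double minimumFramesPerSecond(uint32_t sampleCount, uint32_t samplesPerSecond) {
    assert(sampleCount > 0);
    return (double)samplesPerSecond / sampleCount;
}
```

For 1000 samples at 44100 samples per second this gives roughly 22.7ms and 44.1fps, so 60fps keeps up but 30fps would not.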

Games often have periods of stuttering slowdown on even the latest hardware, but audio usually continues playing smoothly. We need a better strategy than expecting to run at a perfect frame rate. One solution would be increasing the size of the buffer. For example, a 50ms buffer would play fine down to 20fps. The downside is this introduces latency: the audio data the game outputs will start playing up to 50ms in the future.

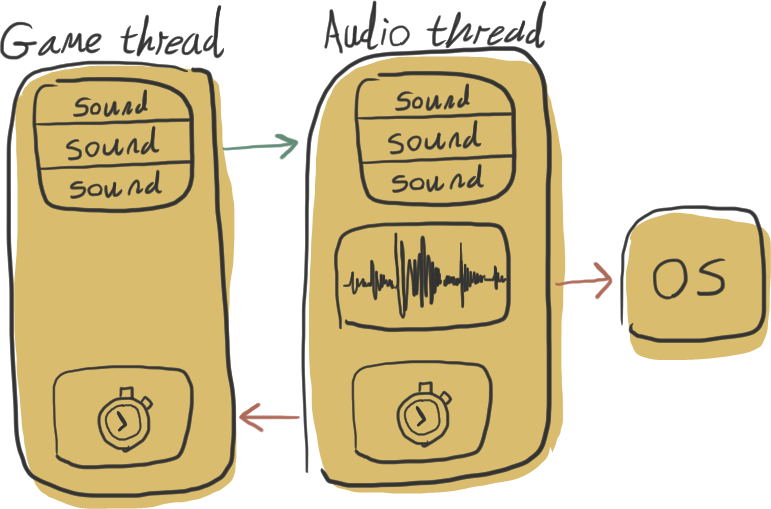

A small audio buffer optimizes for low latency. To write to a small buffer frequently, most games use a dedicated audio thread. The OS can wake the thread when the audio buffer is low on unplayed samples.

Following is mostly the same example, but this time playing on a separate thread and using an event to wake it.

audio_thread.cpp

    // Compile: cl audio_thread.cpp /link ole32.lib
    #include <windows.h>
    #include <inttypes.h>
    #include <math.h>
    #include <mmdeviceapi.h>
    #include <audioclient.h>

    #define BYTES_PER_CHANNEL sizeof(float)
    #define CHANNELS_PER_SAMPLE 1
    #define SAMPLES_PER_SECOND 44100

    DWORD WINAPI audioThread(void* param)
    {
        CoInitializeEx(nullptr, COINIT_DISABLE_OLE1DDE);

        HRESULT hr;

        IMMDeviceEnumerator* enumerator;
        hr = CoCreateInstance(
            __uuidof(MMDeviceEnumerator), NULL, CLSCTX_ALL,
            __uuidof(IMMDeviceEnumerator), (void**)&enumerator);

        IMMDevice* endpoint;
        hr = enumerator->GetDefaultAudioEndpoint(eRender, eMultimedia, &endpoint);

        IAudioClient* audioClient;
        hr = endpoint->Activate(__uuidof(IAudioClient), CLSCTX_ALL, NULL, (void**)&audioClient);

        REFERENCE_TIME defaultDevicePeriod, minDevicePeriod;
        hr = audioClient->GetDevicePeriod(&defaultDevicePeriod, &minDevicePeriod);

        WAVEFORMATEX format = {0};
        format.wFormatTag = WAVE_FORMAT_IEEE_FLOAT;
        format.nChannels = CHANNELS_PER_SAMPLE;
        format.nSamplesPerSec = SAMPLES_PER_SECOND;
        format.wBitsPerSample = BYTES_PER_CHANNEL*8;
        format.nBlockAlign = (CHANNELS_PER_SAMPLE*BYTES_PER_CHANNEL);
        format.nAvgBytesPerSec = SAMPLES_PER_SECOND*format.nBlockAlign;
        hr = audioClient->Initialize(AUDCLNT_SHAREMODE_SHARED, AUDCLNT_STREAMFLAGS_EVENTCALLBACK | AUDCLNT_STREAMFLAGS_AUTOCONVERTPCM, defaultDevicePeriod, 0, &format, 0);

        // The OS signals this event whenever the buffer is ready for more samples
        HANDLE wakeAudioThreadEvent = CreateEventA(0, false, false, 0);
        hr = audioClient->SetEventHandle(wakeAudioThreadEvent);

        IAudioRenderClient* renderClient;
        hr = audioClient->GetService(__uuidof(IAudioRenderClient), (void**)&renderClient);

        uint32_t bufferSampleCount;
        hr = audioClient->GetBufferSize(&bufferSampleCount);

        uint64_t sampleTime = 0;
        hr = audioClient->Start();
        while (WaitForSingleObject(wakeAudioThreadEvent, INFINITE) == WAIT_OBJECT_0) {
            uint32_t paddingSampleCount;
            audioClient->GetCurrentPadding(&paddingSampleCount);
            uint32_t sampleCount = bufferSampleCount - paddingSampleCount;

            float* buffer;
            if (SUCCEEDED(renderClient->GetBuffer(sampleCount, (BYTE**)&buffer))) {
                // Sine wave: sin(2*pi*frequency*time)
                float toneHertz = 500;
                for (uint32_t i=0; i<sampleCount; ++i) {
                    buffer[i] = sinf(sampleTime*2.0f*3.14159f*toneHertz/SAMPLES_PER_SECOND);
                    sampleTime += 1;
                }
                renderClient->ReleaseBuffer(sampleCount, 0);
            }
        }
        return 0;
    }

    int main()
    {
        CreateThread(0, 0, audioThread, 0, 0, 0);
        Sleep(INFINITE);
        return 0;
    }

Playing Waveforms

Sounds and music played in a game are stored as a series of samples, making a waveform. To play sounds we zero out the audio output buffer, then sum waveform samples into it, a process called mixing.

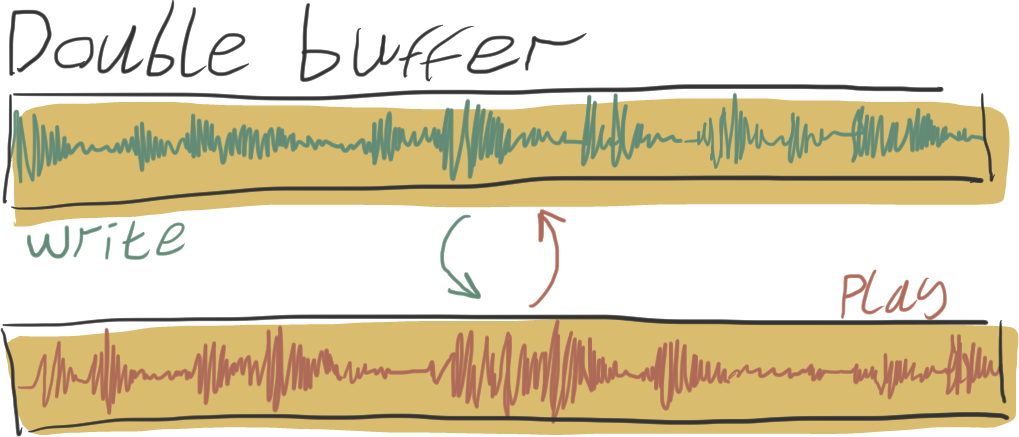

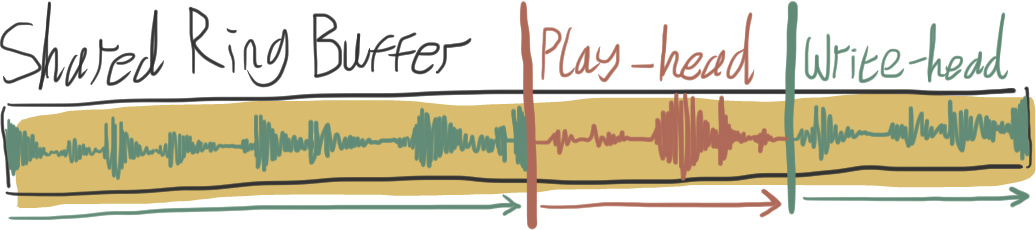
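A minimal sketch of mixing, assuming 16-bit mono waveforms and a float output buffer. The function names `clearBuffer` and `mixInto` are made up for illustration, and dividing by 32768 is just one common way to convert 16-bit samples to floats.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

// Zero the output buffer so mixing starts from silence.
void clearBuffer(float* output, uint32_t outputCount) {
    assert(output != 0);
    memset(output, 0, outputCount * sizeof(float));
}

// Sum a 16-bit mono waveform into the output buffer at the given volume.
// Samples that don't fit in the output buffer are ignored.
void mixInto(float* output, uint32_t outputCount,
             const int16_t* samples, uint32_t sampleCount, float volume) {
    assert(output != 0 && samples != 0);
    uint32_t count = (sampleCount < outputCount) ? sampleCount : outputCount;
    for (uint32_t i = 0; i < count; ++i) {
        output[i] += volume * (samples[i] / 32768.0f); // convert to roughly [-1, 1], then add
    }
}
```

Because samples are summed, several loud sounds can push the result past the [-1, 1] range. Mixing in floating point lets that happen without wrapping around, and a volume adjustment or limiter can bring it back down before output.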

Having an audio thread makes accessing waveform assets complicated. Audio in games is always playing, even when loading and unloading assets. We need to be sure that the audio thread is not trying to write out a waveform while that waveform is being unloaded.

One way to approach this problem is to mix sounds into an output buffer on the gameplay thread, avoiding multithreaded access of assets entirely. Then we have the problem mentioned before with inconsistent frame rates causing audio glitches. We can mitigate it by writing more audio than necessary per frame. If the gameplay thread mixes 100ms worth of audio instead of 16ms worth, the game could drop to 10fps before audio starts dropping.

To prevent this from introducing a lot of latency, there are a few strategies we could use:

- Mix in the gameplay thread and exchange buffer pointers with the audio thread when finished.

- Use a play-head to mark where the audio thread is reading from and a write-head to mark where mixed audio can be written to. The write-head stays just in front of the play-head. This is how it's done in DirectSound, an older Windows API that now sits on top of WASAPI.

With either method, the audio thread copies the mixed audio into the OS's output buffer. The downside is we're mixing more audio than we need to. If the game is currently running at 16ms per frame, that's 84ms worth of audio that goes unused. On the other hand, computers are extremely fast, and the wasted work might make no difference if the game is already well within its CPU budget.

Since one of my goals was to optimize for CPU usage, I wanted to only mix audio that would actually be played. To do that, I needed to mix on the audio thread.

Managing Assets

When multithreading, I don't want to just say "make sure to stop all playing sounds before unloading them." I want to be sure that race conditions will never happen.

With audio mixing on its own thread, rendering audio becomes similar to rendering graphics. OpenGL, for instance, gives user code handles to buffers that are managed on the GPU side of things. We could do the same with audio, giving the audio thread control over waveform lifetimes, while the gameplay thread refers to them using handles.

The multithreaded operations I needed were:

- Add a waveform asset and return a handle to it.

- Mark the waveform asset that corresponds to a handle for deletion.

- Get the waveform asset that corresponds to a handle.

Waveforms are loaded by the gameplay thread or by dedicated asset loader threads. Either way, adding an asset must be thread-safe. Marking for deletion must be as well, but the audio thread can free the memory synchronously after it's submitted the output buffer. In my engine, there's no way to have a handle on the gameplay thread after marking it for deletion, so temporarily getting the read-only asset is not a concern.

By imposing an upper limit on how many waveforms can be loaded at once, an array is enough to store waveforms across threads. I went for a lock-free implementation to prevent the audio thread from potentially hanging while waiting to acquire a mutex.

waveform_manager.cpp

    #include <windows.h>
    #include <inttypes.h>
    #include <limits.h>
    #include <stdlib.h>
    #include <string.h>
    #include <assert.h>

    typedef int16_t MonoSample;

    struct StereoSample
    {
        int16_t left;
        int16_t right;
    };

    struct Waveform
    {
        uint32_t sampleCount;
        MonoSample* monoSamples;
        StereoSample* stereoSamples;
    };

    struct WaveformHandle
    {
        uint64_t id;
        uint32_t index;
    };

    struct WaveformManager
    {
        static const uint32_t maxSlots = 1024;
        struct Slot {
            Waveform* waveform; // 0 when the slot is free
            uint64_t id;        // ULLONG_MAX when marked for removal
        };
        Slot slots[maxSlots];
        uint64_t previousWaveformId;
    };

    // Called from the gameplay thread or asset loader threads.
    WaveformHandle addWaveform(WaveformManager* waveformManager, Waveform waveform)
    {
        Waveform* waveformPtr = (Waveform*)malloc(sizeof(Waveform));
        memcpy(waveformPtr, &waveform, sizeof(Waveform));

        for (uint32_t i=0; i<WaveformManager::maxSlots; ++i) {
            // Atomically claim the first slot whose waveform pointer is still null
            void* result = InterlockedCompareExchangePointer((void**)&waveformManager->slots[i].waveform, waveformPtr, 0);
            if (result == 0) {
                waveformManager->slots[i].id = InterlockedIncrement(&waveformManager->previousWaveformId);
                WaveformHandle handle = {0};
                handle.id = waveformManager->slots[i].id;
                handle.index = i;
                return handle;
            }
        }

        free(waveformPtr); // no free slot was claimed, so don't leak the copy
        assert(!"Waveform manager is full; exceeded max loaded waveforms.");
        return {0};
    }

    // Returns 0 if the handle is stale or out of range.
    Waveform* getWaveform(WaveformManager* waveformManager, WaveformHandle handle)
    {
        return (handle.index < waveformManager->maxSlots && waveformManager->slots[handle.index].id == handle.id)? waveformManager->slots[handle.index].waveform : 0;
    }

    void markWaveformForRemoval(WaveformManager* waveformManager, WaveformHandle handle)
    {
        waveformManager->slots[handle.index].id = ULLONG_MAX;
    }

    // Called from the audio thread after it has submitted the output buffer.
    void updateWaveformManager(WaveformManager* waveformManager)
    {
        for (uint32_t i=0; i<WaveformManager::maxSlots; ++i) {
            if (waveformManager->slots[i].id == ULLONG_MAX) {
                free(waveformManager->slots[i].waveform->monoSamples);
                free(waveformManager->slots[i].waveform->stereoSamples);
                free(waveformManager->slots[i].waveform);
                waveformManager->slots[i].id = 0;
                MemoryBarrier();
                // Clearing the pointer last makes the slot available to addWaveform again
                waveformManager->slots[i].waveform = 0;
            }
        }
    }

Game Audio Interface

Audio libraries tend to have interfaces where you play sounds with a function: soundHandle = playSound(waveFile). Likewise, you would update and stop sounds by calling functions that take the sound handle.

This means the list of playing sounds is managed by the audio library without a clear way to roll back to a previous state. When a rollback is needed, the game would have to tell the audio library to stop playing some sounds, roll back, and then start playing sounds again as we go through the frames.

How would the audio library know at what point in time a sound started? Rollback cuts off the beginning of animations; it should also cut off the beginning of sounds so nothing is delayed.

How could we identify which sounds started before the rollback so we don't lose those?

How could we make sure sounds are re-played at the exact same point? Even one sample off is noticeable.

Answering these questions could get complicated, especially if the library is trying to be a black box. To optimize for simplicity, what I really wanted was a plain data structure of audio state that could be saved and duplicated.

    struct Sound {
        AssetHandle waveFile;
        uint64_t startedSampleTime;
        float volume;
    };

    struct AudioState {
        Sound sounds[50];
    };

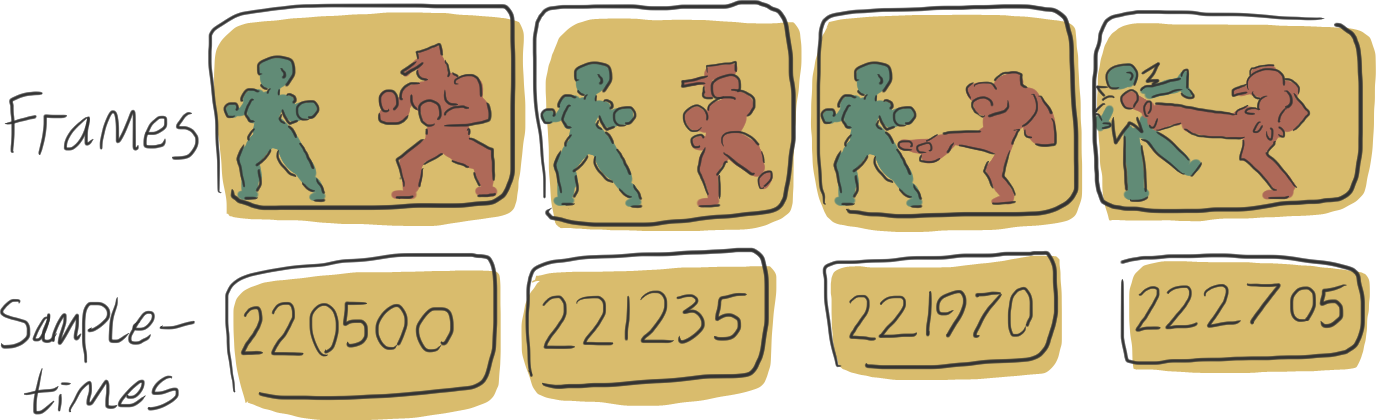

Each frame has its own AudioState that can be trivially copied. startedSampleTime is when the individual sound should start playing. To set this, we need to know where in time the audio thread is currently rendering.
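To make the "trivially copied" part concrete, here is a rough sketch of keeping one AudioState per frame and restoring it on rollback. The `soundCount` field, the `AssetHandle` layout, `AudioHistory`, `kAudioHistorySize`, and the helper functions are all assumptions added for illustration; the struct above doesn't show how active sounds are tracked.

```cpp
#include <cassert>
#include <cstdint>

struct AssetHandle { uint64_t id; uint32_t index; }; // assumed layout, mirroring WaveformHandle

struct Sound {
    AssetHandle waveFile;
    uint64_t startedSampleTime;
    float volume;
};

struct AudioState {
    Sound sounds[50];
    uint32_t soundCount; // assumption: number of active entries in sounds
};

static const int kAudioHistorySize = 8; // assumed rollback window, in frames

struct AudioHistory {
    AudioState frames[kAudioHistorySize]; // frames[f % size] is the audio state at the start of frame f
};

// Append a sound to the state; returns false when every slot is in use.
bool playSound(AudioState* state, AssetHandle waveFile, uint64_t startedSampleTime, float volume) {
    if (state->soundCount >= 50) return false;
    Sound sound = {waveFile, startedSampleTime, volume};
    state->sounds[state->soundCount++] = sound;
    return true;
}

// Saving and restoring are plain struct copies.
void saveAudioFrame(AudioHistory* history, int frame, const AudioState& state) {
    assert(frame >= 0);
    history->frames[frame % kAudioHistorySize] = state;
}

AudioState loadAudioFrame(const AudioHistory* history, int frame) {
    assert(frame >= 0);
    return history->frames[frame % kAudioHistorySize];
}
```

If a K.O. sound is added during a mispredicted frame, loading the AudioState saved before that frame simply doesn't contain it, so there is nothing to explicitly cancel.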

Time Synchronization

Gameplay typically measures time in delta times and frames, which tend to be somewhat variable. Audio, hopefully, plays at a consistent rate no matter what else the game is dealing with. Audio time is measured in samples written and is updated every time we acquire the OS's audio buffer. I call this sample-time, and it counts up forever as long as the audio thread is running.

Alongside the game state frame history, a history of sample-times can track when sounds were started per frame, so we can start playing sounds "in the past" during rollback.

Transferring Data Between Gameplay Thread and Audio Thread

The sounds built up over a game update can be copied to a buffer for the audio thread. When the OS wakes the audio thread, it can update its sound state to match the buffer. We play each sound from its startedSampleTime relative to the audio thread's current sample-time.
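Playing a sound "from its startedSampleTime relative to the current sample-time" comes down to a subtraction per output sample. The following is a sketch under assumptions: `mixSound` and its parameters are hypothetical names, the waveform is 16-bit mono, and `bufferSampleTime` is taken to be the sample-time of the first sample in the output buffer.

```cpp
#include <cassert>
#include <cstdint>

// Mix one sound into the output buffer.
// bufferSampleTime is the sample-time of output[0].
// Returns how many output samples the sound contributed to.
uint32_t mixSound(float* output, uint32_t outputCount, uint64_t bufferSampleTime,
                  const int16_t* samples, uint32_t sampleCount,
                  uint64_t startedSampleTime, float volume) {
    assert(output != 0 && samples != 0);
    uint32_t written = 0;
    for (uint32_t i = 0; i < outputCount; ++i) {
        uint64_t now = bufferSampleTime + i;
        if (now < startedSampleTime) continue;       // the sound hasn't started yet
        uint64_t position = now - startedSampleTime; // offset into the waveform
        if (position >= sampleCount) break;          // the sound has finished
        output[i] += volume * (samples[position] / 32768.0f);
        ++written;
    }
    return written;
}
```

A sound whose startedSampleTime is slightly in the future begins partway through the buffer. A sound that a rollback started in the past begins partway through its waveform, so the beginning is cut off instead of the whole sound being delayed.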

In the other direction, the audio thread produces a sample-time that the gameplay thread uses to start sounds. To make these as close as possible, I set a sound's startedSampleTime to zero when it's triggered while updating game state, then at the end of the frame, get the audio thread's sample-time and set all sounds with startedSampleTime == 0 to that. I add one period of delay to reduce the chances of cutting off the beginning.
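Here is a small sketch of that end-of-frame step. It assumes the audio thread publishes its sample-time through a `std::atomic<uint64_t>`, which is my choice for the example rather than a description of the actual engine code. `stampPendingSounds`, `periodSampleCount`, and the `soundCount` field are likewise hypothetical.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>

struct Sound {
    uint64_t startedSampleTime; // 0 means "triggered this frame, not stamped yet"
    float volume;
};

struct AudioState {
    Sound sounds[50];
    uint32_t soundCount; // assumption: number of active entries in sounds
};

// Called by the gameplay thread at the end of a frame.
// Every sound triggered this frame gets the audio thread's current sample-time
// plus one period of delay. Returns how many sounds were stamped.
uint32_t stampPendingSounds(AudioState* state,
                            const std::atomic<uint64_t>& audioSampleTime,
                            uint32_t periodSampleCount) {
    assert(state->soundCount <= 50);
    uint64_t now = audioSampleTime.load(std::memory_order_acquire);
    uint32_t stamped = 0;
    for (uint32_t i = 0; i < state->soundCount; ++i) {
        if (state->sounds[i].startedSampleTime == 0) {
            state->sounds[i].startedSampleTime = now + periodSampleCount;
            ++stamped;
        }
    }
    return stamped;
}
```

Reading the sample-time once per frame means every sound triggered in the same frame starts on the same sample, and sounds that were already stamped in earlier frames are left alone.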

Looping

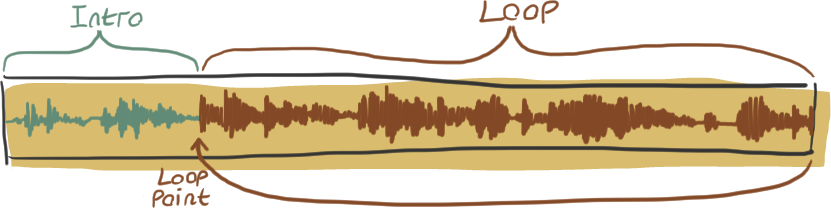

Background music plays in a loop, usually with an intro portion that only plays once. I specify a loop sample index for each song that gets passed to the audio thread. When playing a looping sound past the end of the waveform, it goes back to the loop sample index.
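One way to express that wrap-around is a function from playback position to waveform index. This is a sketch, not my exact code: `loopedSampleIndex` is a made-up name, and `position` is assumed to be the number of samples elapsed since the song's startedSampleTime.

```cpp
#include <cassert>
#include <cstdint>

// Map a playback position (samples since the sound started) to an index into
// a looping waveform. The intro [0, loopSampleIndex) plays once, then the
// region [loopSampleIndex, sampleCount) repeats forever.
uint32_t loopedSampleIndex(uint64_t position, uint32_t sampleCount, uint32_t loopSampleIndex) {
    assert(loopSampleIndex < sampleCount);
    if (position < sampleCount) return (uint32_t)position;
    uint32_t loopLength = sampleCount - loopSampleIndex;
    return loopSampleIndex + (uint32_t)((position - sampleCount) % loopLength);
}
```

Because the index depends only on the elapsed position, a looping song needs no extra per-frame state: the same startedSampleTime always maps to the same sample, before and after a rollback.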

Reverb

After mixing, I apply a reverb filter to the OS output buffer for an echoing effect when in game areas like caves. The filter retains its state between uses, so each time it's applied to the audio buffer, reverb carries over from the last. In a more sophisticated game with 3D sound effects, or to prevent rolled-back sounds from continuing to reverberate, the filter could be applied to individual sounds instead of the whole mixed buffer.
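To illustrate what "retains its state between uses" means, here is a single feedback comb filter, one of the simplest building blocks used in reverbs. It is a stand-in chosen for the example, not the filter I actually use, and the `CombFilter` struct and its tiny 4-sample delay are made up for illustration.

```cpp
#include <cassert>
#include <cstdint>

// Feedback comb filter: y[n] = x[n] + feedback * y[n - delay].
struct CombFilter {
    static const uint32_t delay = 4; // tiny for illustration; a real echo would use thousands of samples
    float history[delay];            // the last `delay` output samples, stored as a ring buffer
    uint32_t cursor;                 // position of the oldest output sample in history
    float feedback;                  // must be below 1 in magnitude so echoes die out
};

// Apply the filter in place. The history persists in the struct, so echoes
// from one buffer carry over into the next.
void applyCombFilter(CombFilter* filter, float* buffer, uint32_t count) {
    assert(filter->feedback > -1.0f && filter->feedback < 1.0f);
    for (uint32_t i = 0; i < count; ++i) {
        float out = buffer[i] + filter->feedback * filter->history[filter->cursor];
        filter->history[filter->cursor] = out;
        filter->cursor = (filter->cursor + 1) % CombFilter::delay;
        buffer[i] = out;
    }
}
```

Feeding an impulse in one buffer and silence in the next still produces output in the second buffer. That carried-over state is also why a rolled-back sound can keep reverberating when the filter runs on the whole mix.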

Calculating reverb is serial and relatively expensive. Adding it is one thing that prompted me to switch from the method of filling a buffer with more mixed audio than needed to mixing on the audio thread.

Device Changes

With WASAPI, the audio client is tied to a device. Let's say the user starts our application with headphones as their primary device. When the user switches their primary device from headphones to speakers, the audio will continue playing on headphones. When the user unplugs their headphones, WASAPI functions will return error codes. In both cases I'd rather support what the user is trying to do.

We can create an object implementing the IMMNotificationClient interface that listens for device change events. When an event occurs, I wake the audio thread, destroy all the old WASAPI objects, and recreate them with the new default device.

One last problem is when there's no device at all. The game will continue queueing sounds but the audio thread will never wake to play them, and without updated sample-times the gameplay thread won't know when to end them. I wrote a special case for this to time out waiting on the audio thread every second, allowing the thread to advance sample-time without playing any sounds.

This example program uses an audio thread to play a sine-wave song based on user input. It handles device changes and shows the WASAPI wrapper interface I made for my games.

music_box.cpp

    // Compile: cl music_box.cpp /link ole32.lib
    // Usage: music_box.exe "Hello World"
    #include <windows.h>
    #include <inttypes.h>
    #include <math.h>
    #include <string.h>
    #include <mmdeviceapi.h>
    #include <audioclient.h>

    #define BYTES_PER_CHANNEL sizeof(float)
    #define CHANNELS_PER_SAMPLE 1
    #define SAMPLES_PER_SECOND 44100

    // Public API -----------------------------------------------------------------

    struct AudioEvents;
    struct AudioDevice;
    struct AudioBuffer;

    AudioEvents createAudioEvents ();
    void        destroyAudioEvents(AudioEvents* events);
    void        endAudioEvents    (AudioEvents* events);

    AudioDevice createAudioDevice (AudioEvents* events);
    void        destroyAudioDevice(AudioDevice* device);

    bool waitForAudioBuffer(AudioDevice* device, AudioEvents* events, AudioBuffer* out_buffer);
    void submitAudioBuffer (AudioDevice* device, AudioBuffer buffer);

    // Implementation -------------------------------------------------------------

    struct AudioEvents
    {
        volatile bool quit = false;
        HANDLE wakeAudioThreadEvent;
    };

    struct AudioNotifications : public IMMNotificationClient
    {
        // Boilerplate
        ULONG STDMETHODCALLTYPE AddRef() { return 1; }
        ULONG STDMETHODCALLTYPE Release() { return 1; }
        HRESULT STDMETHODCALLTYPE QueryInterface(REFIID riid, VOID **ppvInterface) { return S_OK; }
        HRESULT OnDeviceAdded(LPCWSTR pwstrDeviceId) { return S_OK; }
        HRESULT OnDeviceRemoved(LPCWSTR pwstrDeviceId) { return S_OK; }
        HRESULT OnDeviceStateChanged(LPCWSTR pwstrDeviceId, DWORD dwNewState) { return S_OK; }
        HRESULT OnPropertyValueChanged(LPCWSTR pwstrDeviceId, const PROPERTYKEY key) { return S_OK; }

        // Parts that matter
        volatile bool deviceChanged = false;
        HANDLE wakeAudioThreadEvent;
        HRESULT OnDefaultDeviceChanged(EDataFlow flow, ERole role, LPCWSTR pwstrDefaultDeviceId)
        {
            if (flow == eRender && role == eMultimedia) {
                deviceChanged = true;
                SetEvent(wakeAudioThreadEvent);
            }
            return S_OK;
        }
    };

    struct AudioDevice
    {
        uint32_t bufferSampleCount;
        AudioNotifications* notifications;
        IMMDeviceEnumerator* enumerator;
        IMMDevice* endpoint;
        IAudioClient* audioClient;
        IAudioRenderClient* renderClient;
    };

    struct AudioBuffer
    {
        float* buffer;
        uint32_t sampleCount;
        uint32_t elapsedSampleTime; // Same as sampleCount when a device is connected
    };

    AudioEvents createAudioEvents()
    {
        AudioEvents events = {0};
        events.wakeAudioThreadEvent = CreateEventA(0, false, false, 0);
        return events;
    }

    void endAudioEvents(AudioEvents* events)
    {
        events->quit = true;
        SetEvent(events->wakeAudioThreadEvent);
    }

    void destroyAudioEvents(AudioEvents* events)
    {
        CloseHandle(events->wakeAudioThreadEvent);
    }

    AudioDevice createAudioDevice(AudioEvents* events)
    {
        AudioDevice device = {0};
        CoCreateInstance(
            __uuidof(MMDeviceEnumerator), NULL, CLSCTX_ALL,
            __uuidof(IMMDeviceEnumerator), (void**)&device.enumerator);

        // Listen for default device changes so the audio thread can be woken
        device.notifications = new AudioNotifications;
        device.notifications->wakeAudioThreadEvent = events->wakeAudioThreadEvent;
        device.enumerator->RegisterEndpointNotificationCallback(device.notifications);

        if (SUCCEEDED(device.enumerator->GetDefaultAudioEndpoint(eRender, eMultimedia, &device.endpoint))) {
            if (SUCCEEDED(device.endpoint->Activate(__uuidof(IAudioClient), CLSCTX_ALL, NULL, (void**)&device.audioClient))) {
                REFERENCE_TIME defaultDevicePeriod;
                REFERENCE_TIME minDevicePeriod;
                device.audioClient->GetDevicePeriod(&defaultDevicePeriod, &minDevicePeriod);

                WAVEFORMATEX customFormat = {0};
                customFormat.wFormatTag = WAVE_FORMAT_IEEE_FLOAT;
                customFormat.nChannels = CHANNELS_PER_SAMPLE;
                customFormat.nSamplesPerSec = SAMPLES_PER_SECOND;
                customFormat.wBitsPerSample = BYTES_PER_CHANNEL*8;
                customFormat.nBlockAlign = (CHANNELS_PER_SAMPLE*BYTES_PER_CHANNEL);
                customFormat.nAvgBytesPerSec = SAMPLES_PER_SECOND*customFormat.nBlockAlign;
                device.audioClient->Initialize(AUDCLNT_SHAREMODE_SHARED, AUDCLNT_STREAMFLAGS_EVENTCALLBACK | AUDCLNT_STREAMFLAGS_AUTOCONVERTPCM, defaultDevicePeriod, 0, &customFormat, 0);

                device.audioClient->SetEventHandle(events->wakeAudioThreadEvent);
                device.audioClient->GetService(__uuidof(IAudioRenderClient), (void**)&device.renderClient);
                device.audioClient->GetBufferSize(&device.bufferSampleCount);
                device.audioClient->Start();
            }
        }
        return device;
    }

    void destroyAudioDevice(AudioDevice* device)
    {
        if (device->enumerator) {
            device->enumerator->UnregisterEndpointNotificationCallback(device->notifications);
            device->enumerator->Release();
        }
        if (device->notifications) delete device->notifications;
        if (device->renderClient)  device->renderClient->Release();
        if (device->audioClient)   device->audioClient->Release();
        if (device->endpoint)      device->endpoint->Release();
    }

    bool waitForAudioBuffer(AudioDevice* device, AudioEvents* events, AudioBuffer* out_buffer)
    {
        // Time out after one second so sample-time still advances with no device
        DWORD waitResult = WaitForSingleObject(events->wakeAudioThreadEvent, 1000);
        if (events->quit) return false;

        if (device->notifications->deviceChanged) {
            destroyAudioDevice(device);
            *device = createAudioDevice(events);
        }

        memset(out_buffer, 0, sizeof(AudioBuffer));
        if (device->renderClient) {
            uint32_t paddingSampleCount;
            device->audioClient->GetCurrentPadding(&paddingSampleCount);
            uint32_t sampleCount = device->bufferSampleCount - paddingSampleCount;

            float* buffer;
            if (SUCCEEDED(device->renderClient->GetBuffer(sampleCount, (BYTE**)&buffer))) {
                out_buffer->buffer = buffer;
                out_buffer->sampleCount = sampleCount;
                out_buffer->elapsedSampleTime = sampleCount;
                memset(out_buffer->buffer, 0, BYTES_PER_CHANNEL*CHANNELS_PER_SAMPLE*sampleCount);
            }
        } else if (waitResult == WAIT_TIMEOUT) {
            out_buffer->elapsedSampleTime = SAMPLES_PER_SECOND;
        }
        return true;
    }

    void submitAudioBuffer(AudioDevice* device, AudioBuffer buffer)
    {
        if (device->renderClient) device->renderClient->ReleaseBuffer(buffer.sampleCount, 0);
    }

    // Example Program -------------------------------------------------------------

    struct AudioThreadData
    {
        AudioEvents events;
        char sound;
    };

    DWORD WINAPI audioThread(void* param)
    {
        AudioThreadData* data = (AudioThreadData*)param;
        AudioDevice device = createAudioDevice(&data->events);

        float t = 0;
        float volume = 1;
        float toneHertz = 0;
        AudioBuffer buffer = {0};
        while (waitForAudioBuffer(&device, &data->events, &buffer)) {
            // Sine wave
            for (uint32_t i=0; i<buffer.sampleCount; ++i) {
                // Smoothly turn audio on and off so there are no clicks
                if (data->sound == ' ') {
                    volume = max(0, volume - 0.01f);
                } else {
                    toneHertz = data->sound * data->sound / 8;
                    volume = min(1, volume + 0.01f);
                }

                // Render the sound
                buffer.buffer[i] = volume * sinf(t*3.14159f/SAMPLES_PER_SECOND);
                t += toneHertz;
            }
            submitAudioBuffer(&device, buffer);
        }

        destroyAudioDevice(&device);
        return 0;
    }

    int main(int argc, char** argv)
    {
        if (argc < 2) return 1; // expects the text to play as the first argument

        CoInitializeEx(nullptr, COINIT_MULTITHREADED | COINIT_DISABLE_OLE1DDE);

        AudioThreadData threadData = {0};
        threadData.events = createAudioEvents();
        HANDLE threadHandle = CreateThread(0, 0, audioThread, &threadData, 0, 0);

        for (size_t i=0; i<strlen(argv[1]); ++i) {
            threadData.sound = argv[1][i];
            Sleep(150);
        }

        endAudioEvents(&threadData.events);
        WaitForSingleObject(threadHandle, INFINITE);
        destroyAudioEvents(&threadData.events);
        return 0;
    }